Delivery versus Intelligence Consumption: Part 3

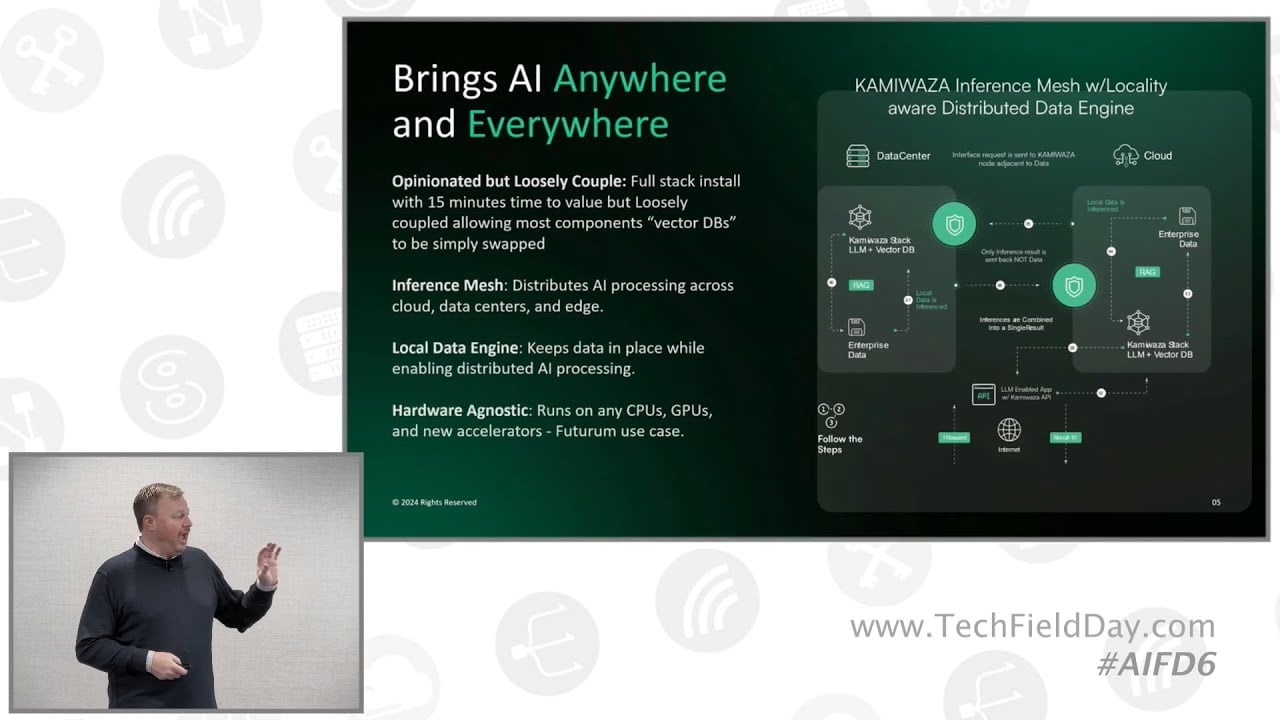

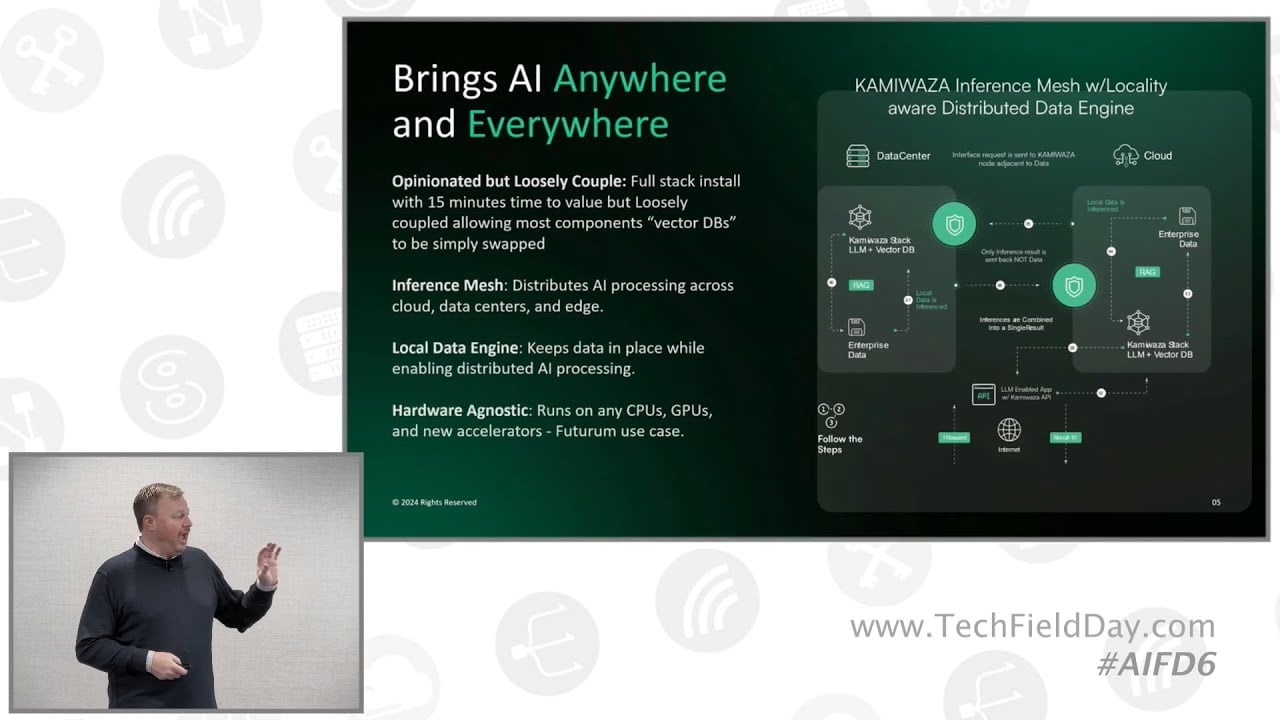

How Kamiwaza delivers intelligence ubiquitously, securely, and at enterprise scale. In ...

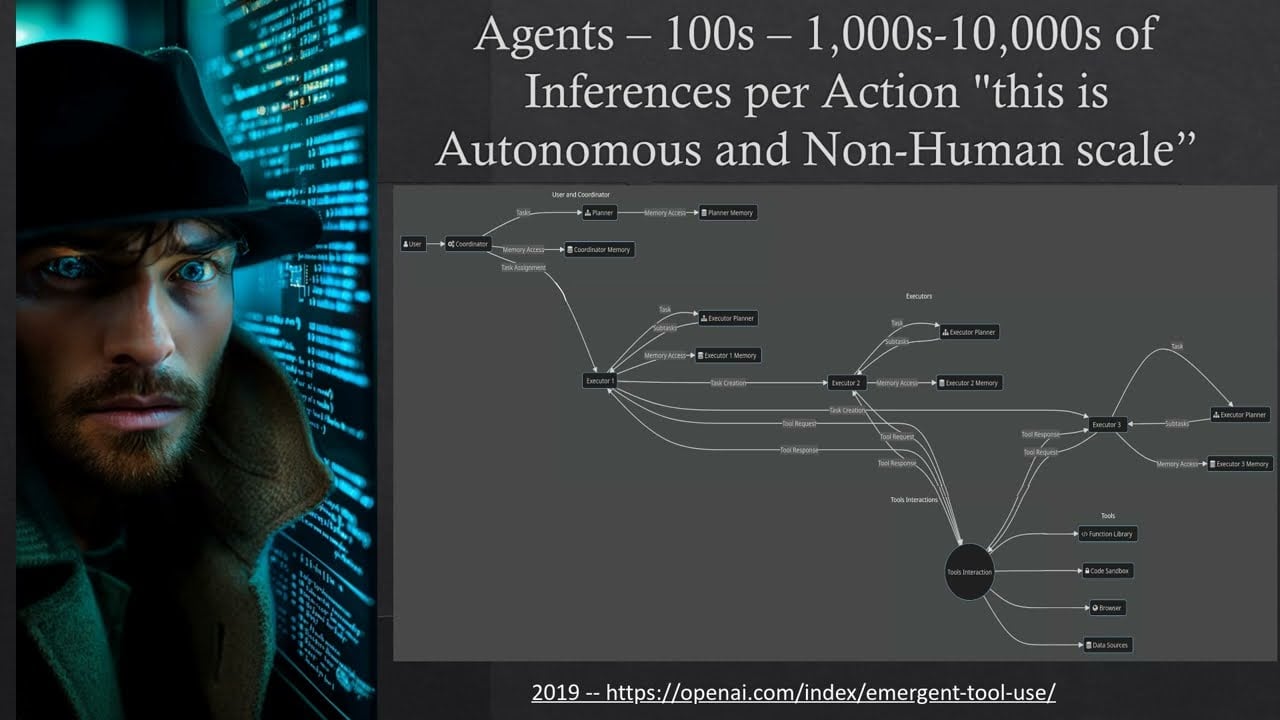

Create intelligent agents and automated workflows across your distributed data infrastructure without compromising security.

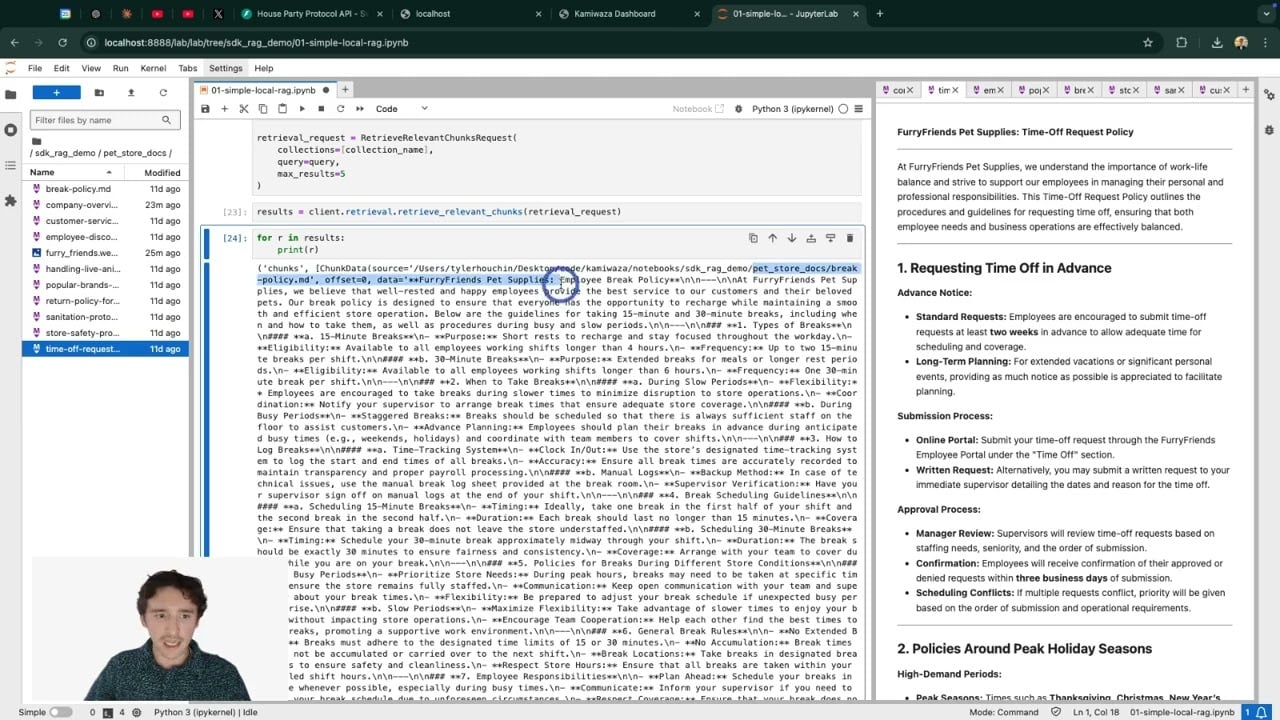

Access and process data across any system without moving it or compromising security.

Coordinate AI workloads globally across cloud, on-prem, and edge environments using a silicon-agnostic engine that routes inference to the most efficient locations.

A unified graph engine powers agents with attribute-driven, ephemeral permissions, delegation chains, and full auditability, providing Contextual Authorization at 5th Industrial scale.

Automatically discover and map entity relationships across your entire data estate to create living knowledge graphs that update in real time.


ARIA (Accessibility Remediation Intelligence Agent) identifies accessibility issues and produces compliant, review-ready updates at scale.

ARIA is one of several Smart City solutions developed with HPE and NVIDIA.

See why 70% of GenAI projects fail and how Kamiwaza solves the enterprise AI challenge by deploying intelligence across distributed environments in a hardware-agnostic way that leverages your existing infrastructure.

Where traditional AI demands compromise, Kamiwaza delivers results. Handle your most valuable and sensitive processes with AI that respects security boundaries while automating what matters most.

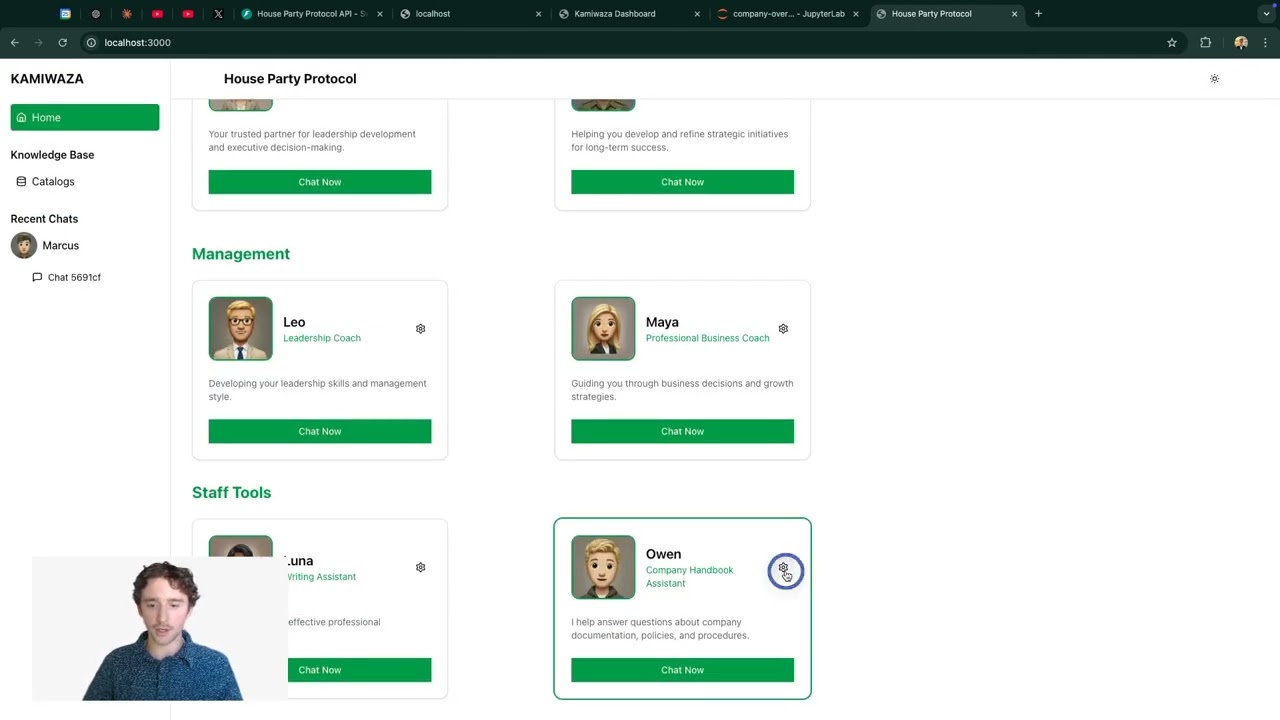

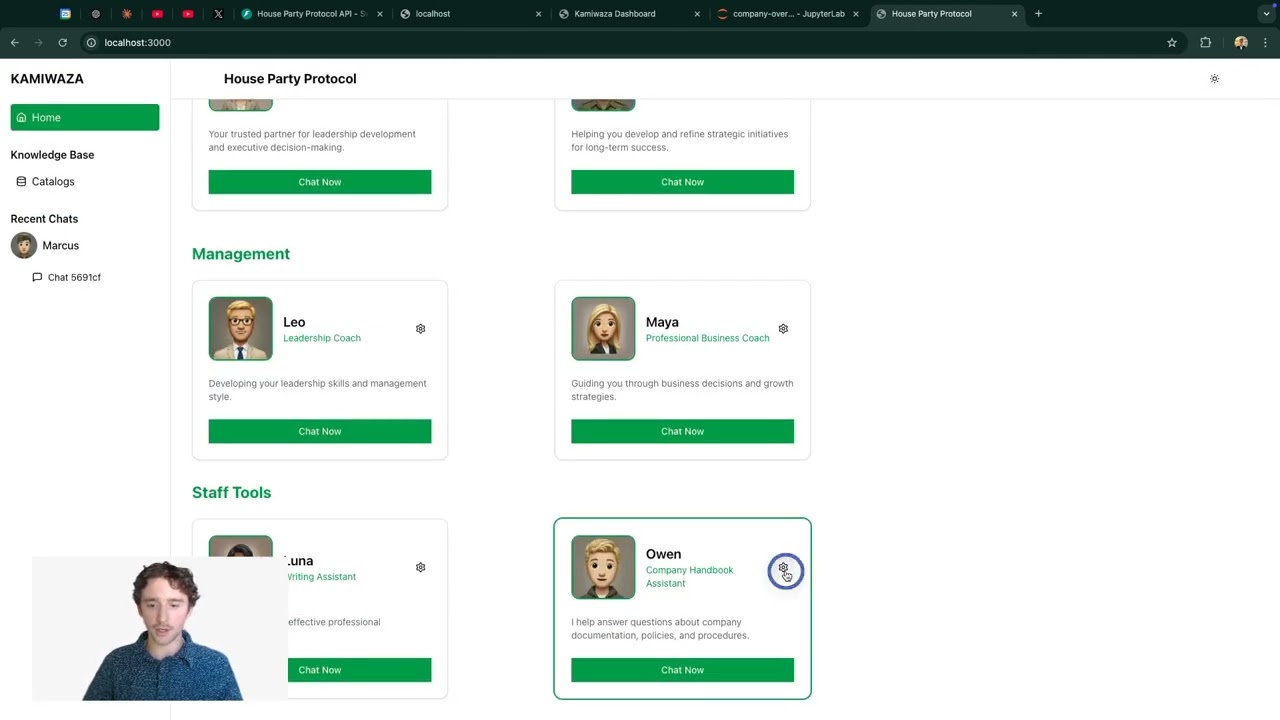

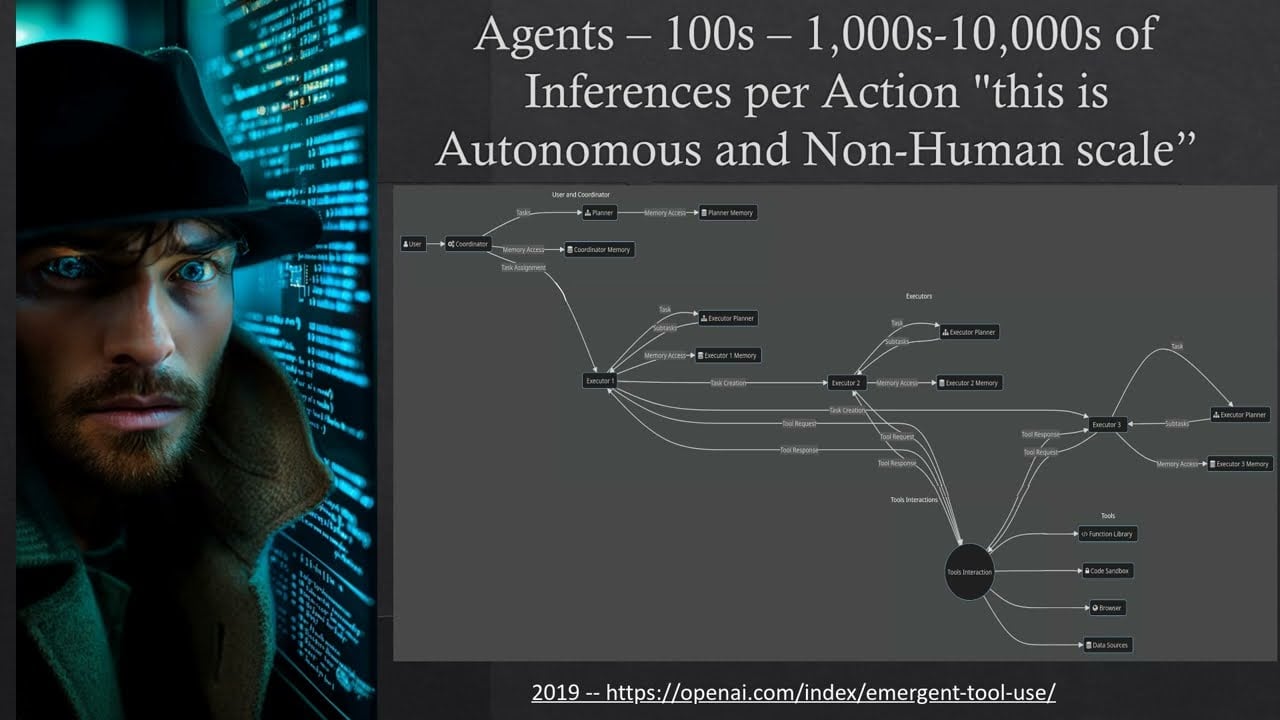

Deploy intelligent agents that autonomously perform complex tasks, make decisions, and take actions across enterprise systems without human intervention.

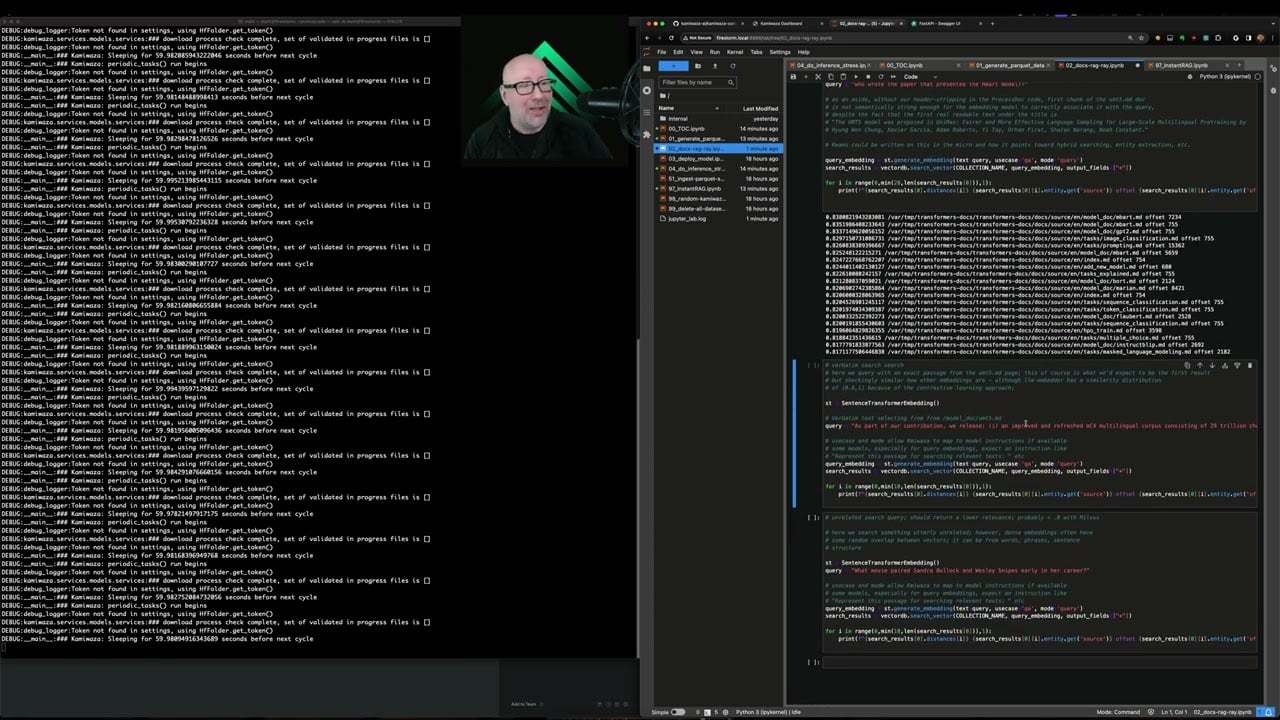

Build comprehensive knowledge graphs across enterprise data sources without centralizing sensitive information. Discover hidden relationships and insights at scale.

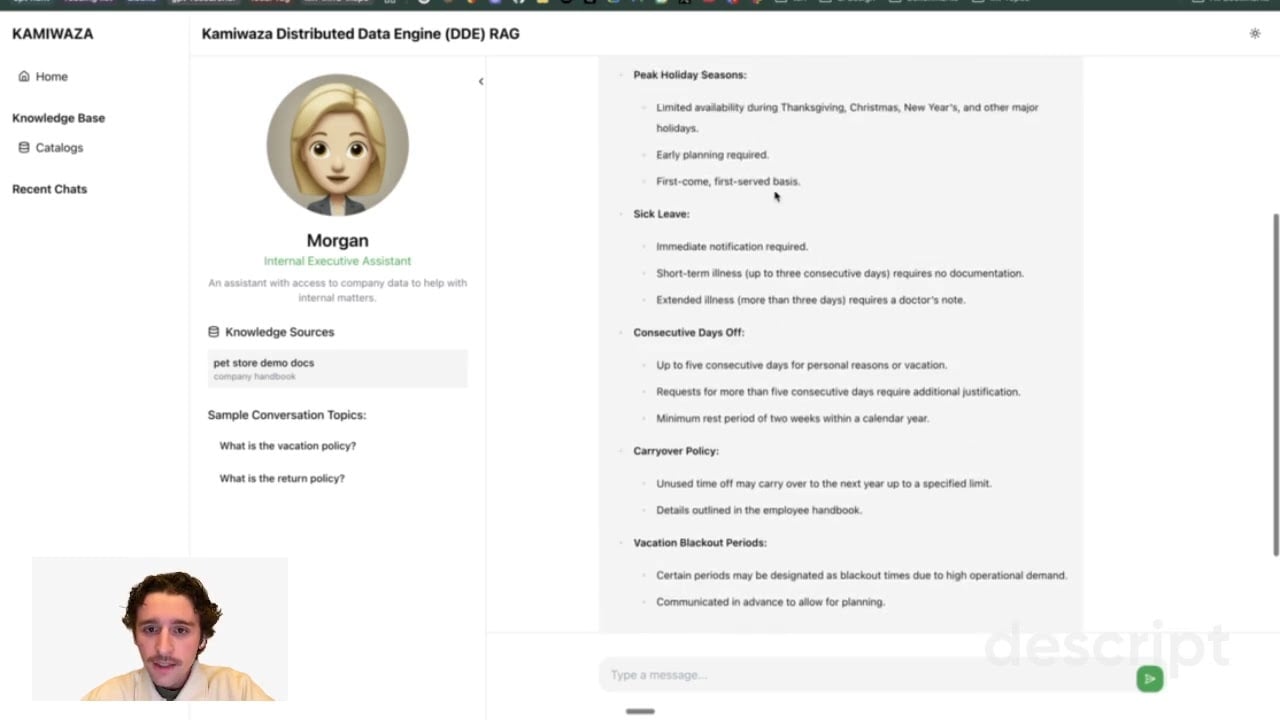

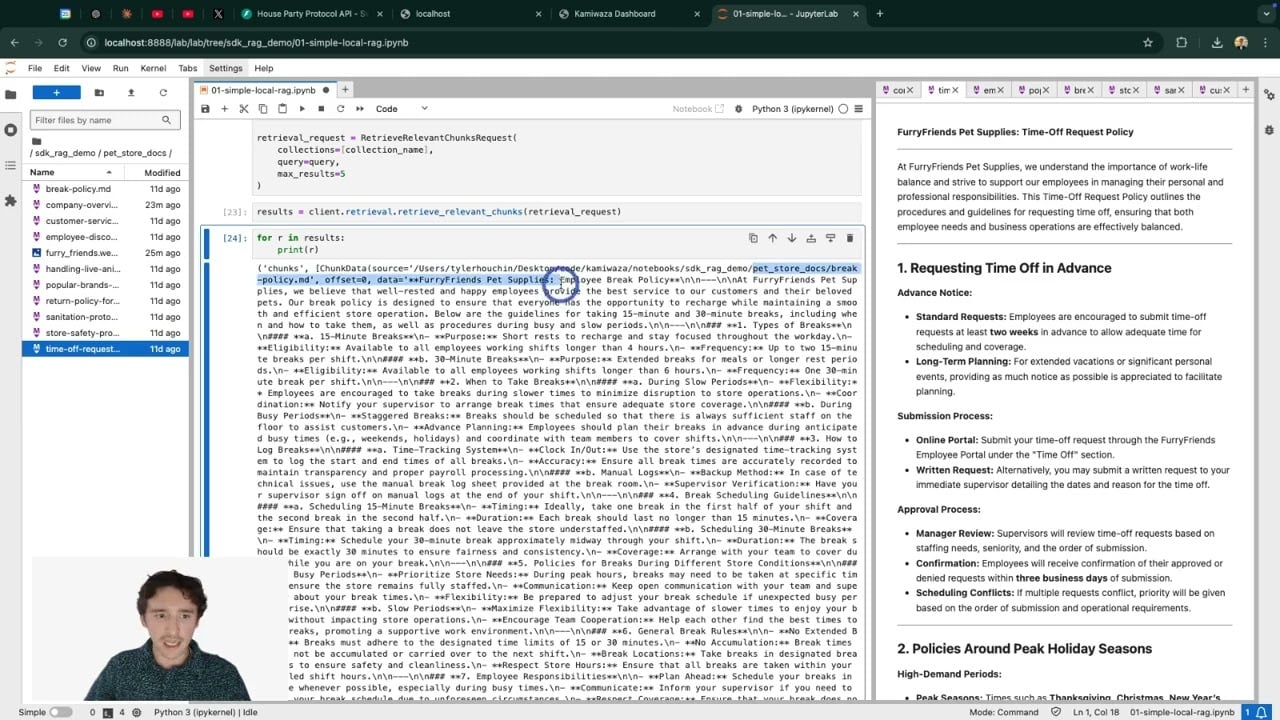

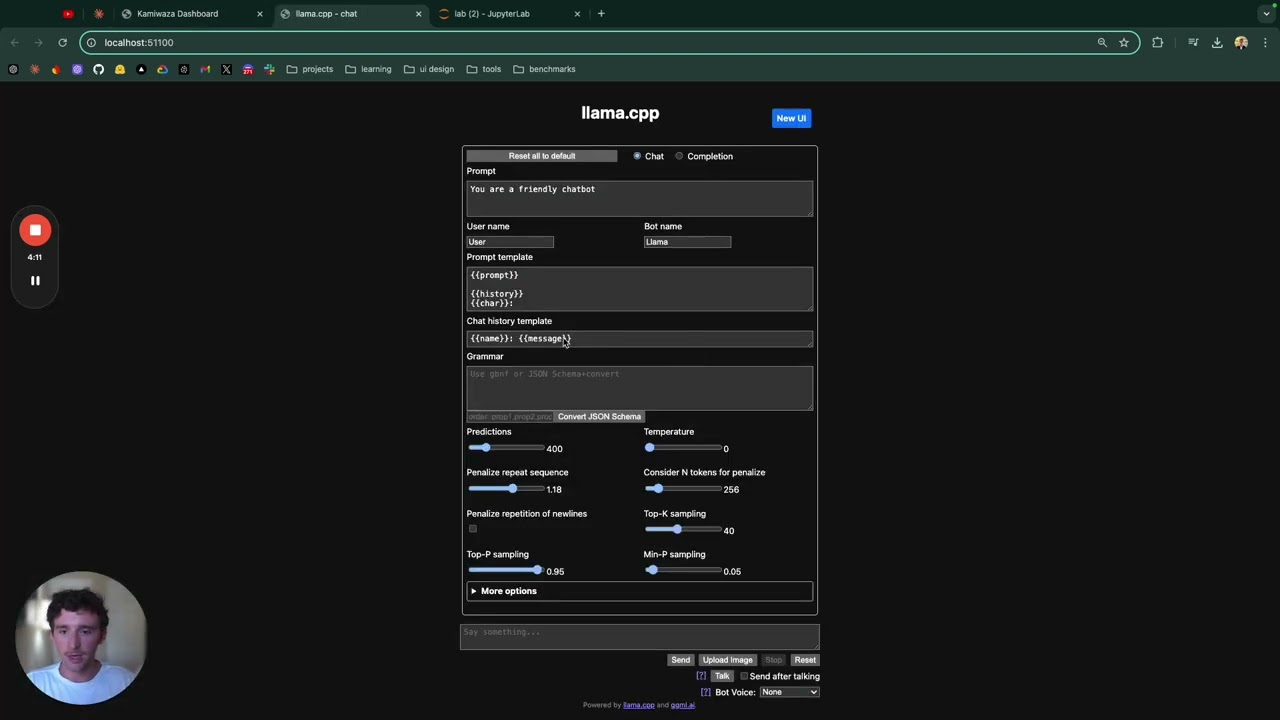

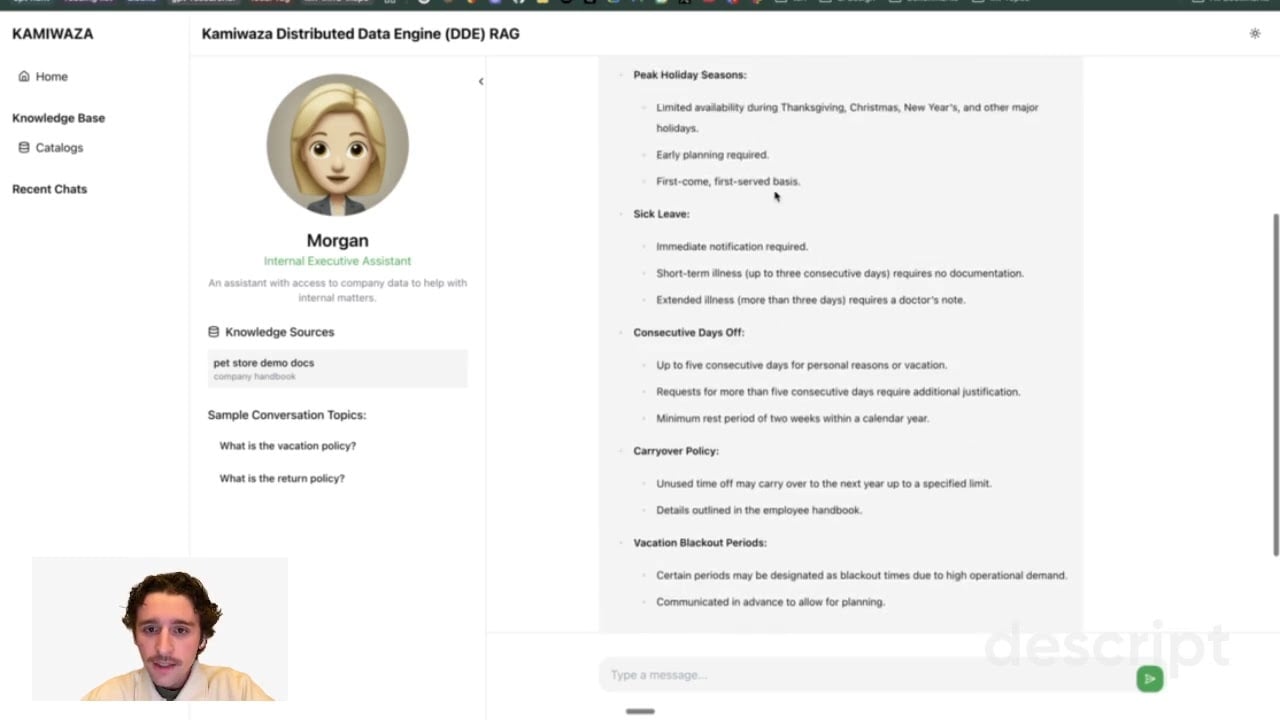

Enable natural language interactions with enterprise data and systems through AI assistants that understand context and provide intelligent responses.

Orchestrate end-to-end business processes spanning multiple systems with AI that adapts to variations and exceptions without breaking.

Extract structured insights from contracts, invoices, and reports across distributed repositories. AI that understands context and relationships, not just text extraction.

Generate insights from distributed datasets without moving sensitive information or compromising compliance requirements.

To us, success means creating measurable impact on your business.

Unlike traditional vendors who sell licenses and leave you to figure out implementation, Kamiwaza delivers business outcomes. Each subscription includes outcome-based support where our expert team works directly with you to implement, optimize, and measure AI solutions that deliver real results.

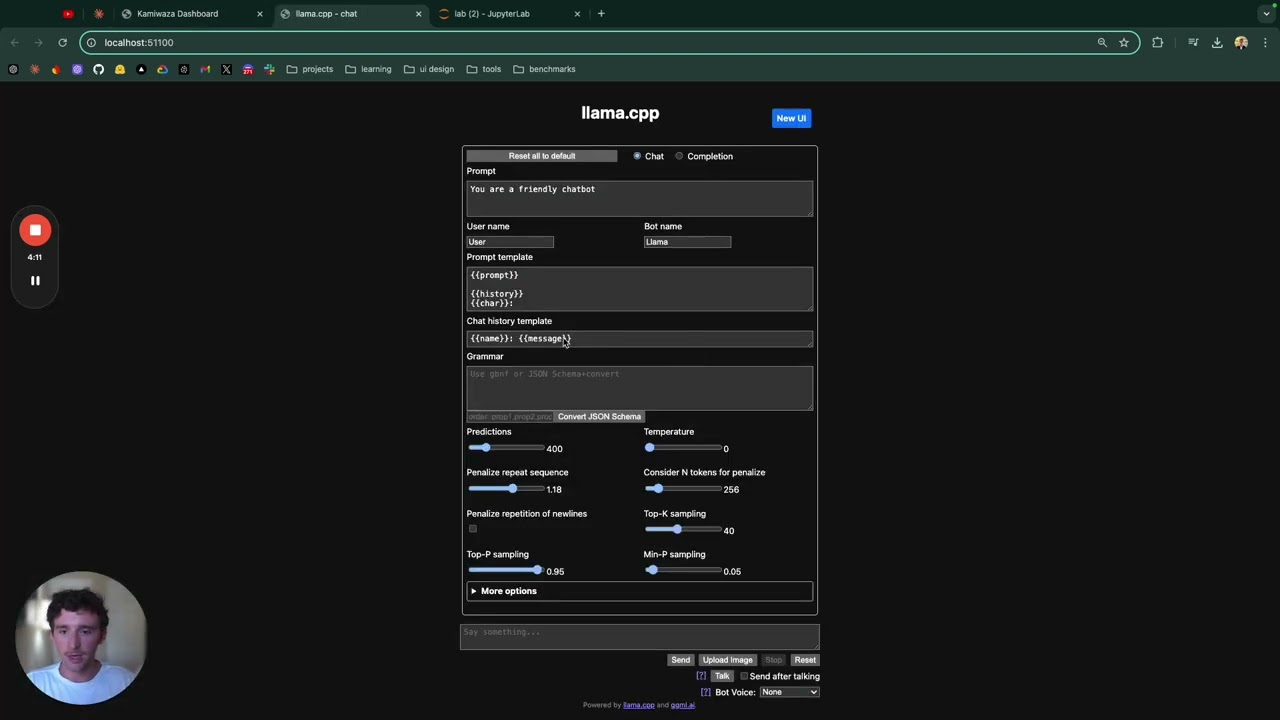

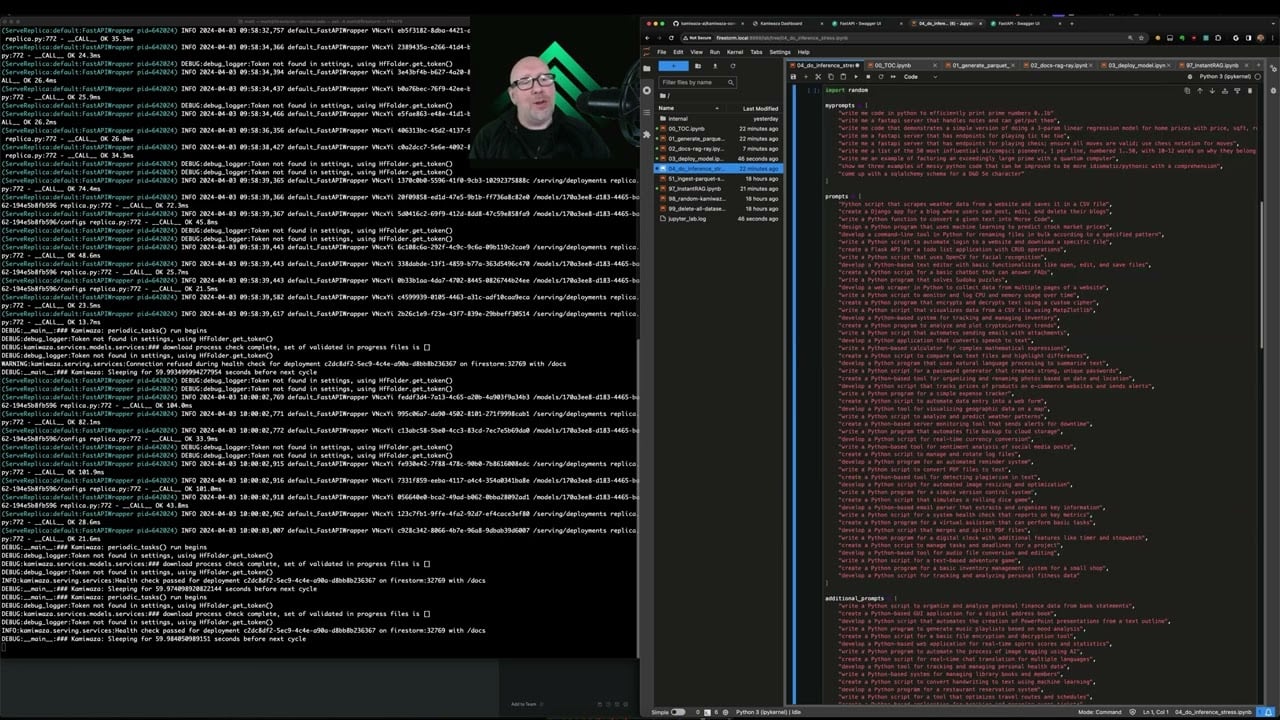

Unlike other AI tools, Kamiwaza meets you where your data lives: on-prem, in the cloud, and even at the edge. Built with Docker for compatibility and connected to Hugging Face for AI model control, our AI orchestration engine searches your data sources to find the information you need.

No costly centralization, no downtime.

Different prices, same powerful AI orchestration engine. We have plans built to match your pace, no matter where you're starting from.

The Department of Homeland Security quickly analyzed 97 years of sensitive weather data without breaking security rules. Campbell’s sped up rebate processing by 87% and got rid of nearly all manual errors.

See how organizations like these are solving tough problems with Kamiwaza.

Reach out to schedule a demo, complete an architecture review, and get started.
